Future Image Processing in the RCJ Maze Simulation World

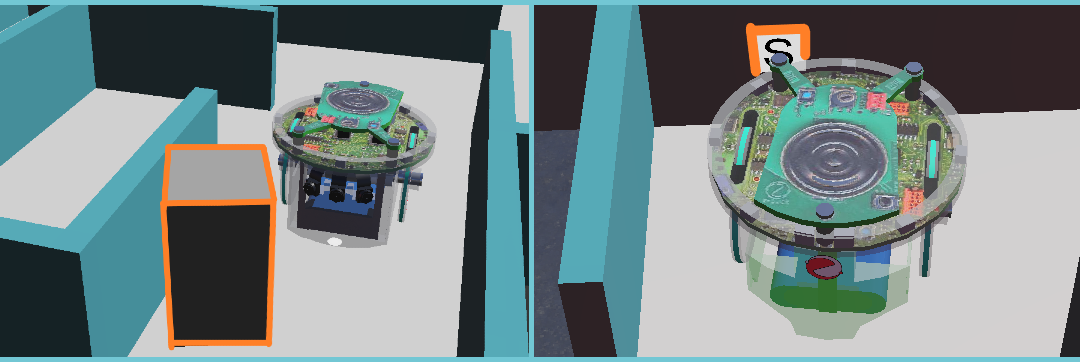

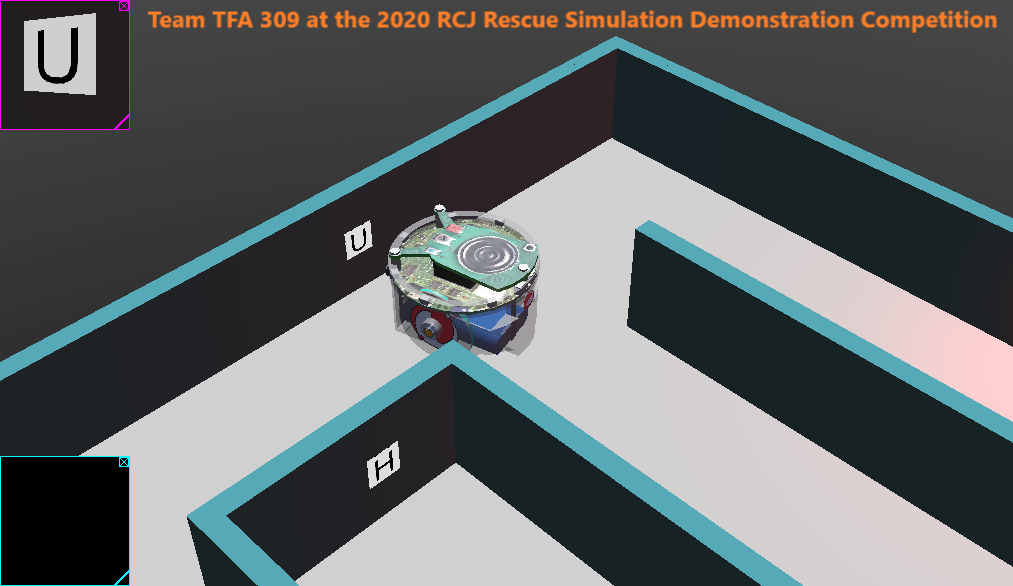

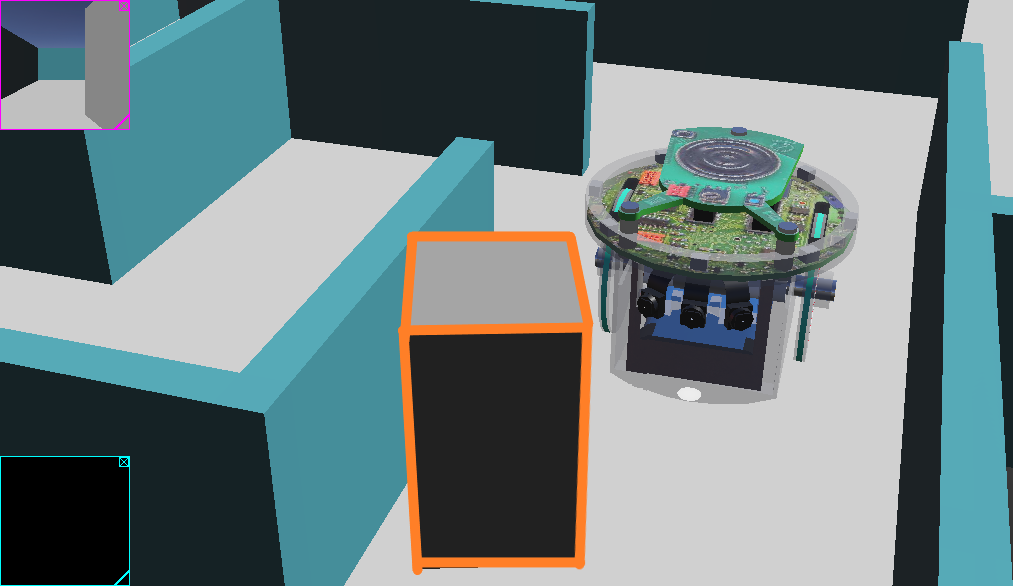

After competing in the RoboCup Junior Rescue Simulation Demonstration Competition in 2020, the TFA Junior Robotics Team realized that there were still many improvements to be made going forward.

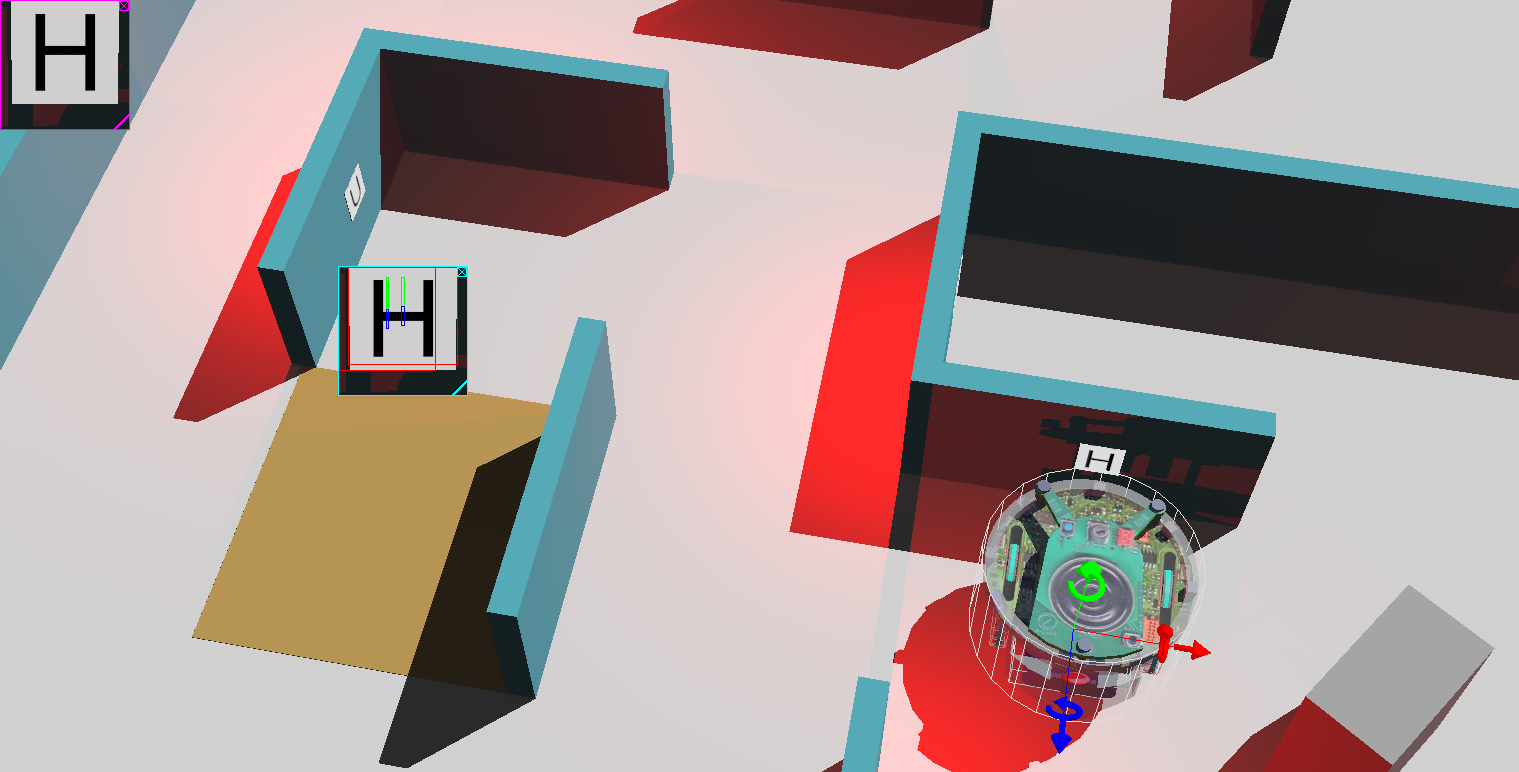

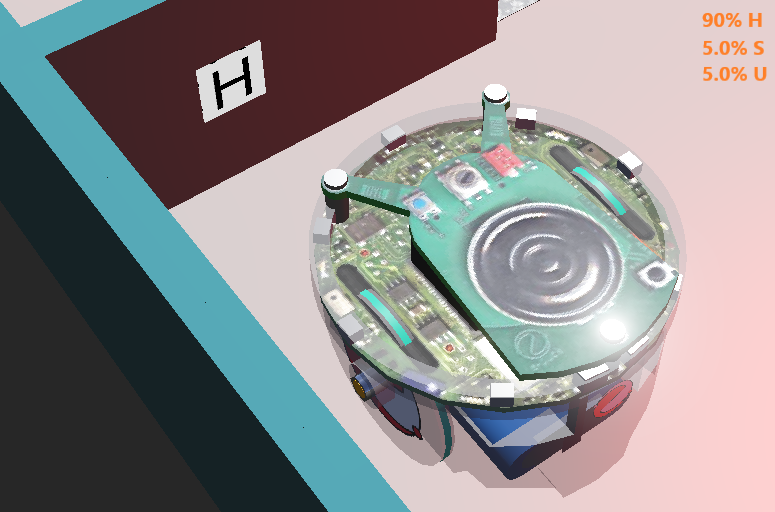

The vision component of the RCJ Rescue Maze Simulation consists of visual victim detection along with other detection algorithms. The TFA Junior Robotics Team was very successful with image processing during the 2020 RoboCup Junior Rescue Simulation Demonstration Competition. However, even with a 100% success rate in victim detection, the team knows that there is a lot to improve on. Improvements include (1) increasing the speed of visual victim detection, (2) detecting other objects in the world, and (3) improving accuracy.

The following are some methods that the TFA Junior Robotics Team has analyzed for potential use in the future.

Object detection

This idea involves finding other objects in the field. These objects could include obstacles, walls, checkpoints, swamps, and/or black holes. By detecting other objects in the field, the TFA team can optimize scoring and therefore earn more points. The team can also use the camera to cross-check other sensors and therefore improve the robustness of the robot.

Using C instead of Python

Another possible implementation would be using C instead of Python to increase processing speed, with a view to running the algorithms on embedded systems in the future. Previously, the TFA Junior Robotics Team had intended to test the team’s algorithms through the Webots Display; however, the team is now leaning towards writing its image processing algorithms in C and extending them to Python. This would allow the team to possibly not use the OpenCV library and instead develop a specialized library of its own. It would also allow the team to embed image processing algorithms into other devices in a post-pandemic world.

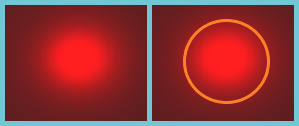

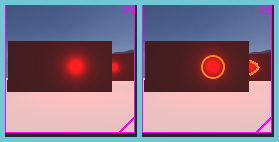

Red Capturing

Something that the TFA Junior Robotics Team attempted during the RCJ Rescue Simulation Demonstration Competition was detecting the red circles that indicate heated victims. Since the heat sensors were placed on the sides of the robot, the team thought that it could try detecting heated victims ahead of the robot using the three front-facing cameras.

If the team is able to do this, the robot could head straight towards a heated victim instead of only detecting it once the heat sensors do. This would result in a faster run, as more victims are “rescued” in less time.
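As an illustration of the idea, the following is a minimal sketch and not the team's actual code. It assumes the camera frame arrives as a raw BGRA byte buffer (4 bytes per pixel, the layout returned by `Camera.getImage()` in the Webots Python API). The function names and the thresholds `min_red`, `max_other`, and `min_fraction` are hypothetical and would need tuning against the real simulation.

```python
def red_fraction_by_column(bgra, width, height, min_red=150, max_other=80):
    """Return, for each image column, the fraction of pixels that look red.

    A pixel counts as red when its red channel is high and its green and
    blue channels are low. The thresholds are illustrative guesses.
    """
    counts = [0] * width
    for y in range(height):
        row_start = y * width * 4
        for x in range(width):
            i = row_start + x * 4
            b, g, r = bgra[i], bgra[i + 1], bgra[i + 2]  # alpha at i + 3 is ignored
            if r >= min_red and g <= max_other and b <= max_other:
                counts[x] += 1
    return [c / height for c in counts]


def red_bearing(bgra, width, height, min_fraction=0.2):
    """Return the horizontal offset of the red region in [-1, 1].

    -1 means far left, 0 means centered, +1 means far right.
    Returns None when no column contains enough red pixels.
    """
    fractions = red_fraction_by_column(bgra, width, height)
    cols = [x for x, f in enumerate(fractions) if f >= min_fraction]
    if not cols:
        return None
    if width == 1:
        return 0.0
    center = sum(cols) / len(cols)
    half = (width - 1) / 2
    return (center - half) / half
```

A controller could steer towards the victim while `red_bearing` is non-zero and fall back to the side heat sensors when it returns `None`. Working directly on the channel bytes also means this check needs neither OpenCV nor an intermediate image file.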

Machine Learning or Dynamic Weighted or Adaptive Letters Tree

Something else that the TFA Junior Robotics Team thought of implementing was a machine learning, dynamically weighted, or adaptively weighted approach to visual victim detection. Currently, TFA’s victim detection is a long list of “if” statements, but if the team can implement a dynamically weighted algorithm, then it is possible to improve this system.

Essentially, by arranging a points or reward system for each victim detection, the robot can decide which letter is most probable. This could improve the overall victim detection accuracy and robustness.
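One way such a points system might look is sketched below. This is a hedged illustration, not the team's algorithm: the letter set (H, S, U), the three feature names, the initial weights, and the update rule in `reinforce` are all assumptions made for the example. Each feature is expected to be a value in [-1, 1], where +1 means "clearly present" and -1 means "clearly absent".

```python
# Hypothetical weights: how strongly each feature speaks for each letter.
WEIGHTS = {
    "H": {"top_open": 1.0, "bottom_open": 1.0, "center_filled": 1.0},
    "S": {"top_open": -1.0, "bottom_open": -1.0, "center_filled": 1.0},
    "U": {"top_open": 1.0, "bottom_open": -1.0, "center_filled": -1.0},
}


def score_letters(features, weights=WEIGHTS):
    """Return a points total per letter: the weighted sum of the features."""
    return {
        letter: sum(w * features.get(name, 0.0) for name, w in fw.items())
        for letter, fw in weights.items()
    }


def most_probable_letter(features, weights=WEIGHTS, min_margin=0.5):
    """Pick the best-scoring letter, or None if the lead is too small."""
    scores = score_letters(features, weights)
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    (best, best_score), (_, second_score) = ranked[0], ranked[1]
    if best_score - second_score < min_margin:
        return None, scores
    return best, scores


def reinforce(weights, letter, features, rate=0.1):
    """Nudge one letter's weights towards the features of a confirmed detection.

    This is a simple moving-average update, chosen only for illustration.
    """
    for name in weights[letter]:
        weights[letter][name] += rate * (features.get(name, 0.0) - weights[letter][name])
```

Compared with a chain of “if” statements, a noisy reading of one feature lowers a letter's score rather than ruling the letter out, and the `min_margin` check lets the robot decline to report when two letters score almost equally.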

BMP images in Webots world

Previously, the TFA Junior Robotics Team had also studied BMP image conversion. There is a possibility that the team implements this in the near future. This would enable the team to not use the OpenCV library and instead design algorithms specific to the needs of the competition. This idea could be an extension of writing in the C programming language, or could be done in Python.
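To show that BMP conversion is feasible without OpenCV, here is a minimal sketch using only the Python standard library. It assumes the input is a raw BGRA buffer with 4 bytes per pixel and rows stored top to bottom, and it produces an uncompressed 24-bit BMP. The function name `bgra_to_bmp` is hypothetical, and the resolution value of 2835 pixels per metre (about 72 DPI) is an arbitrary choice.

```python
import struct


def bgra_to_bmp(bgra, width, height):
    """Encode a top-down BGRA buffer as an uncompressed 24-bit BMP file."""
    row_bytes = width * 3
    padding = (4 - row_bytes % 4) % 4          # each BMP row is padded to 4 bytes
    image_size = (row_bytes + padding) * height
    header_size = 14 + 40                      # file header + BITMAPINFOHEADER

    file_header = struct.pack(
        "<2sIHHI", b"BM", header_size + image_size, 0, 0, header_size
    )
    info_header = struct.pack(
        "<IiiHHIIiiII",
        40,            # size of this header
        width,
        height,        # positive height: rows are stored bottom-up
        1,             # color planes
        24,            # bits per pixel
        0,             # no compression
        image_size,
        2835, 2835,    # horizontal and vertical resolution (pixels per metre)
        0, 0,          # palette fields, unused for 24-bit images
    )

    rows = []
    for y in range(height - 1, -1, -1):        # bottom row first
        row = bytearray()
        for x in range(width):
            i = (y * width + x) * 4
            row += bgra[i:i + 3]               # keep B, G, R and drop alpha
        row += b"\x00" * padding
        rows.append(bytes(row))
    return file_header + info_header + b"".join(rows)
```

The returned bytes can be written to a file opened in binary mode. Because the format is only two fixed headers followed by padded BGR rows, the same logic should carry over to C with little change.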

Another possibility would be getting rid of images completely and instead working directly with the color channels that make up an image. This would mean that there would be no need for BMP images or OpenCV in the team’s image processing algorithms.