Heated Victim Detection using Vision in RCJ Maze Simulation

Heated victim detection is one of the things that the TFA Junior Robotics Team intends to explore following the 2020 RCJ Rescue Demonstration Competition. The team had previously used vision to detect only visual victims. Although the rules have not been released yet, the TFA Junior Robotics Team is keen on getting a head start and honing its image processing skills.

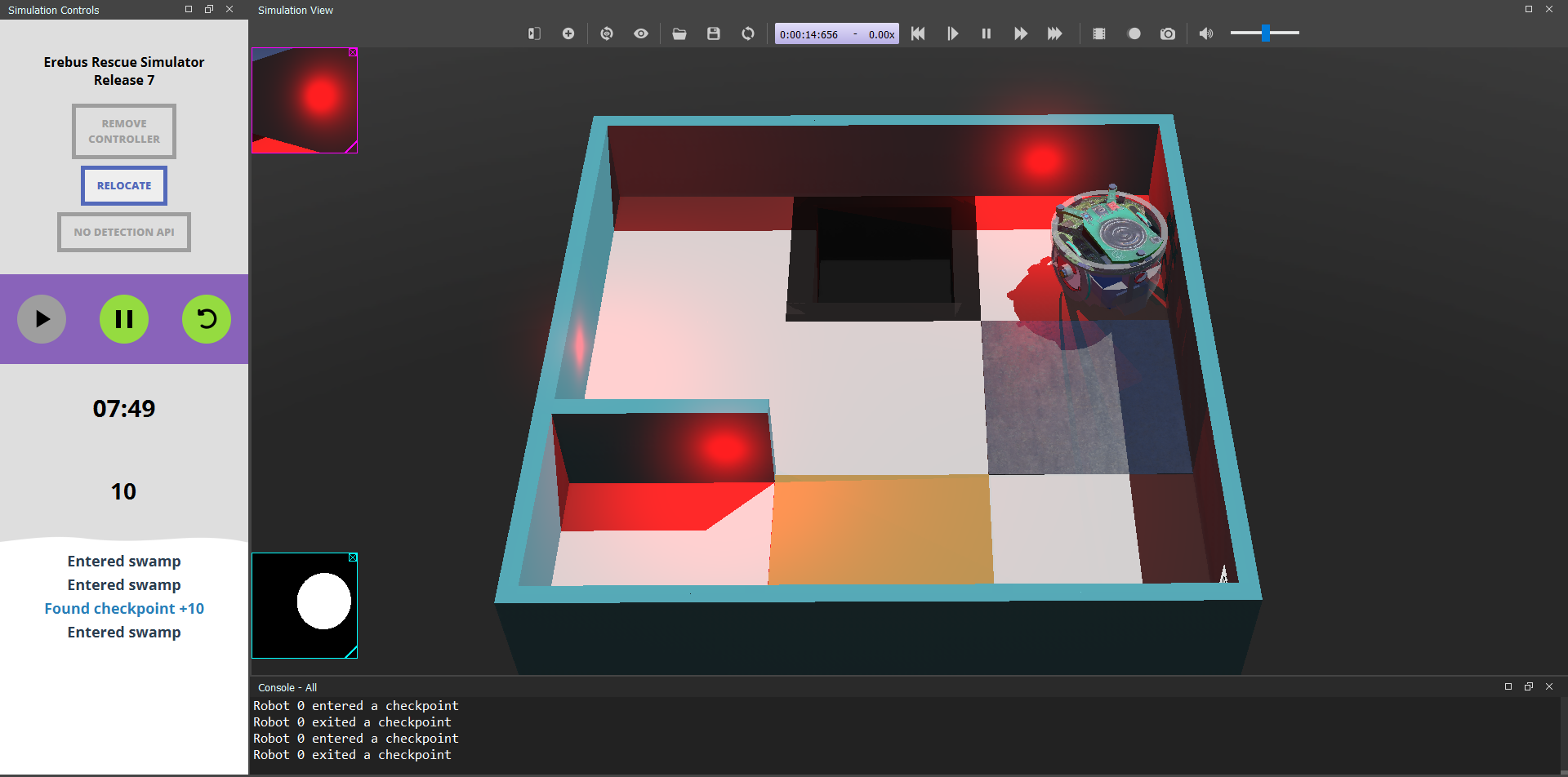

By detecting a red circle, the TFA Junior Robotics Team may be able to detect a heated victim from a few tiles away. The side-facing heat sensors cannot detect victims located ahead of the robot, but the cameras are mounted at the front, which gives a direct view of approaching heated victims. With this strategy, the team can detect victims earlier, adapt its mapping algorithm accordingly, and save time in the maze by reaching victims faster.

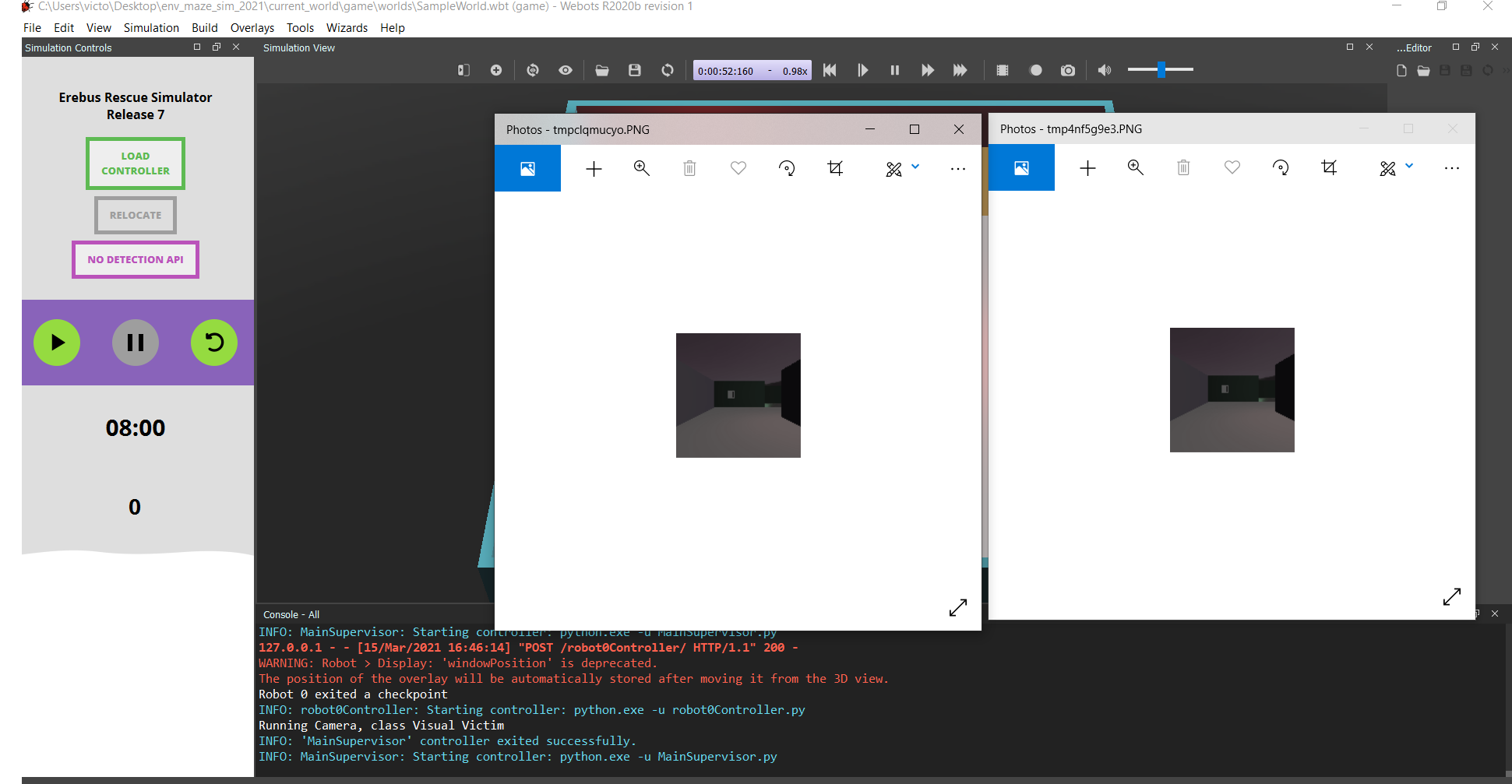

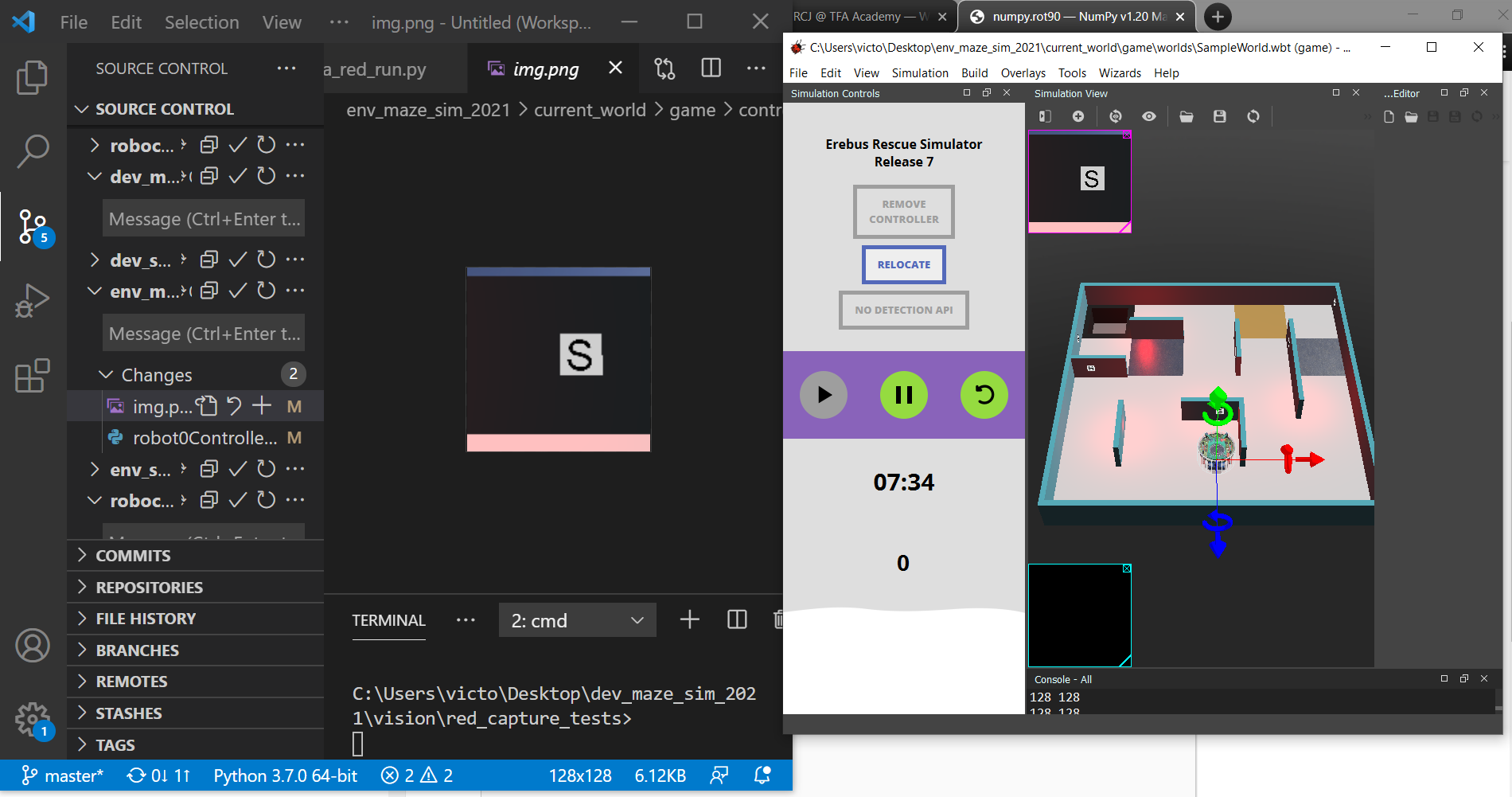

The original plan was to read an image and use OpenCV to detect the red circles. However, after a mask was applied, the results were unexpected. This can be seen in the image below.

After seeing the unexpected results, the team investigated. The first thing that the TFA Junior Robotics Team attempted was to write the image array out to a new image. In the above image, you can see the new image after reading the image data. One can also see that the team checked the output in the Windows Photos app.

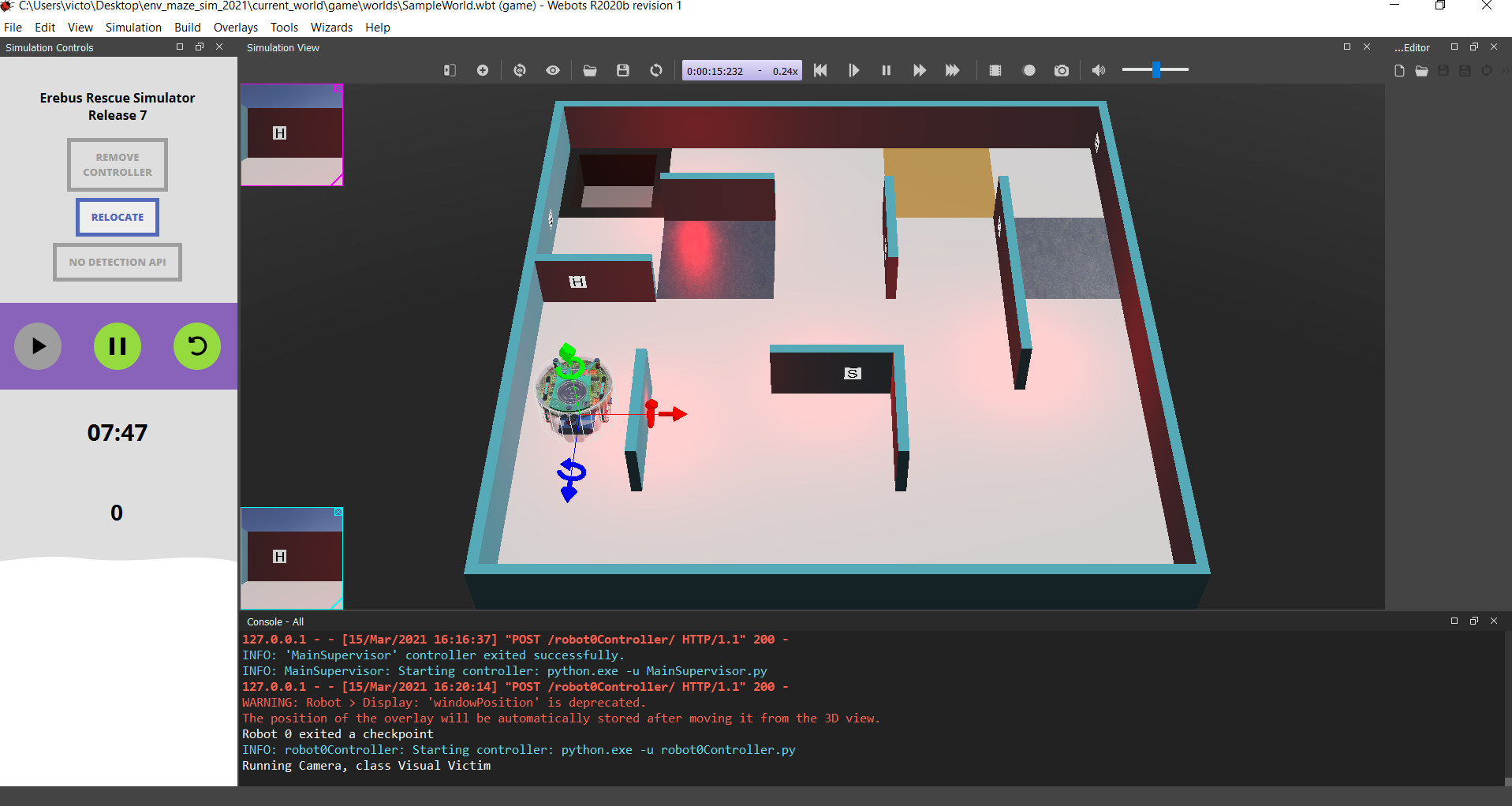

The new image was all dark and seemed to have only 1 channel. Therefore, the team went back to the drawing board. The team brainstormed and decided to try writing the image array data to another file. This took many attempts, as seen in the photos below…

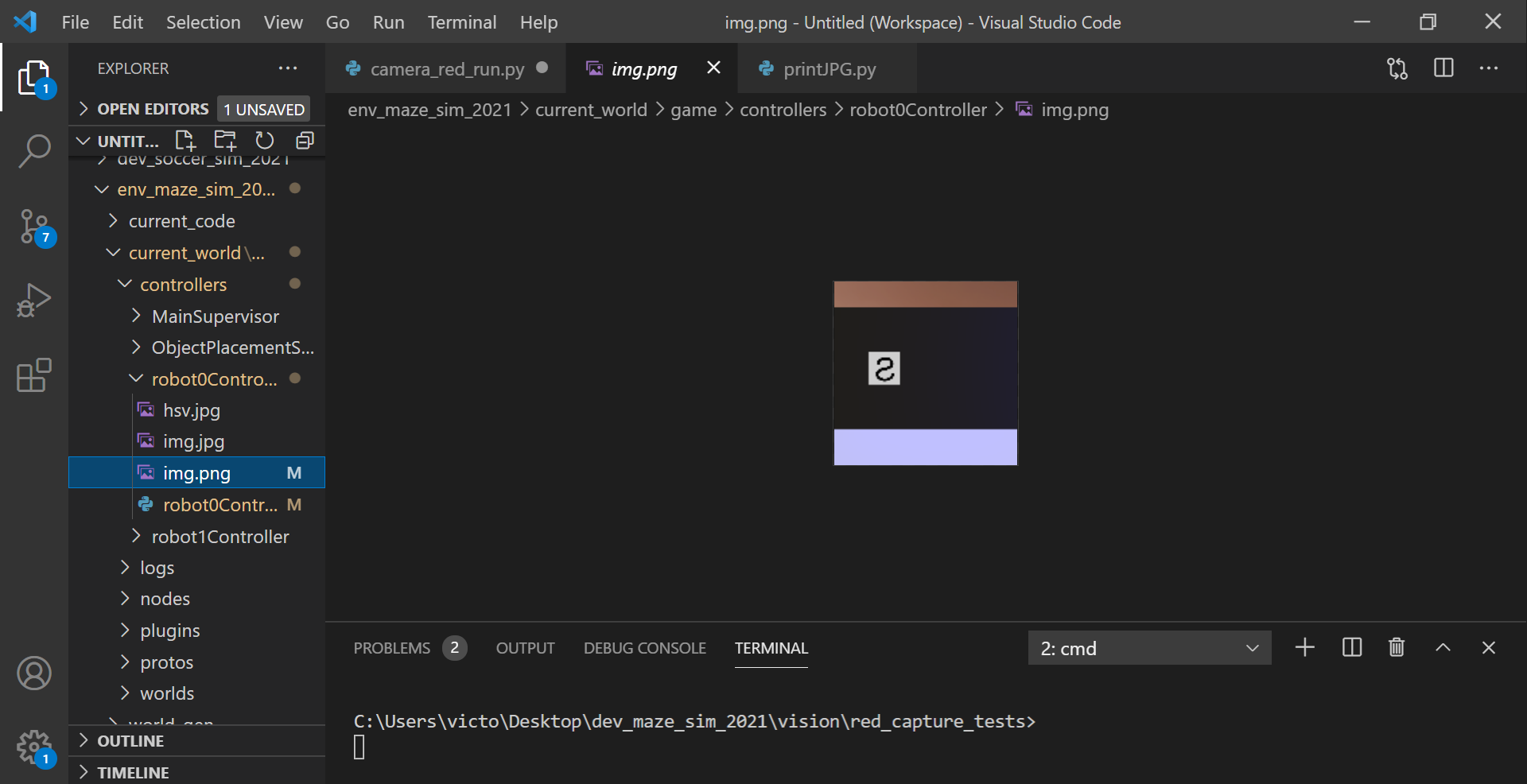

In the above photo, you can see how the image channels are mixed up, resulting in different colours. The TFA Junior Robotics Team determined that this came from writing the image out in an incorrect format. The team tried various OpenCV methods, but decided that it would be best to try a different approach. The team was also interested in learning ways to process images without OpenCV. Hence, the team decided to work directly with the given image data array instead of OpenCV. From there, the team turned to the Python Imaging Library (PIL) to write the data to a file for viewing.
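The channel mix-up described above can be illustrated with a minimal sketch. It assumes the raw camera data arrives as a flat byte buffer in BGRA order (4 bytes per pixel); that layout is an assumption for illustration, not something confirmed by the team's code, and the function name `bgra_to_rgb_rows` is hypothetical.

```python
def bgra_to_rgb_rows(buffer, width, height):
    """Convert a flat BGRA byte buffer into rows of (R, G, B) tuples.

    Assumption: 4 bytes per pixel in B, G, R, A order, stored row by row.
    """
    if len(buffer) != width * height * 4:
        raise ValueError("buffer size does not match width * height * 4")
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            i = (y * width + x) * 4
            # Swap B and R so the pixel reads as RGB; alpha at i + 3 is ignored.
            b, g, r = buffer[i], buffer[i + 1], buffer[i + 2]
            row.append((r, g, b))
        rows.append(row)
    return rows


# A 2x1 test image: one pure-red pixel, then one pure-blue pixel, stored as BGRA.
sample = bytes([0, 0, 255, 255, 255, 0, 0, 255])
rgb_rows = bgra_to_rgb_rows(sample, width=2, height=1)
```

If the red and blue channels are not swapped, a red victim marker shows up as blue, which is one plausible cause of the odd colours seen above. With Pillow installed, the resulting pixels could then be flattened and written to a file with `Image.new`, `putdata`, and `save`.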

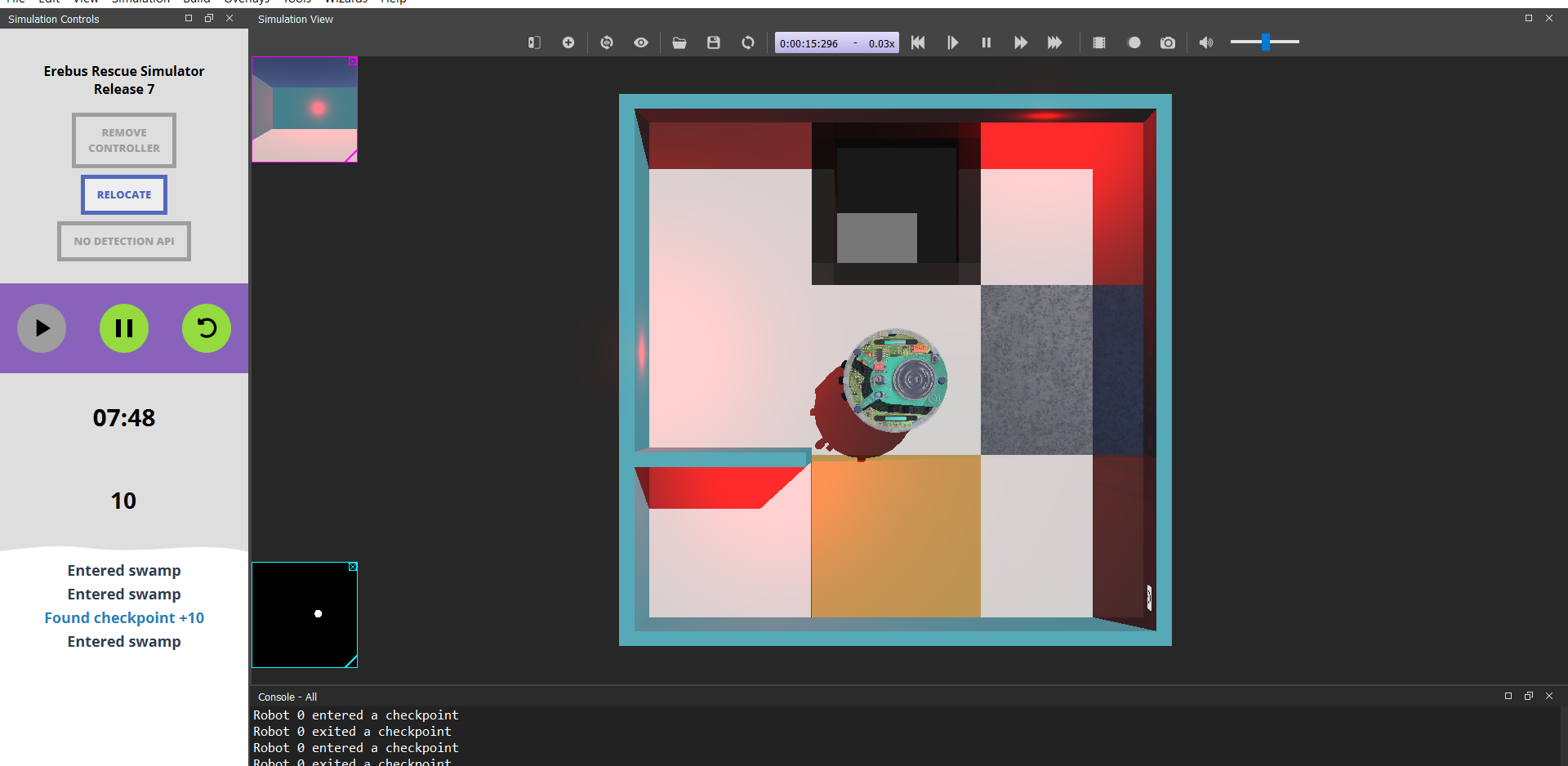

After correcting the colour, the team found that the image was upside down. Therefore, the team created an algorithm to flip the image data across the centre line. The result was a righted image as shown below.
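A flip like the one described above can be sketched in a few lines. This is only an illustration of the idea, assuming the image is held as a list of pixel rows; the helper name `flip_vertical` is hypothetical and not taken from the team's code.

```python
def flip_vertical(rows):
    """Return a copy of the image with the row order reversed.

    Reversing the rows mirrors the image across its horizontal centre line,
    so the top row becomes the bottom row and an upside-down image is righted.
    """
    return [list(row) for row in reversed(rows)]


# A 3-row, 2-column test image using single letters in place of pixels.
upside_down = [
    ["e", "f"],
    ["c", "d"],
    ["a", "b"],
]
righted = flip_vertical(upside_down)
```

Only the order of the rows changes; the pixels within each row keep their left-to-right order, so the image is not mirrored sideways.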

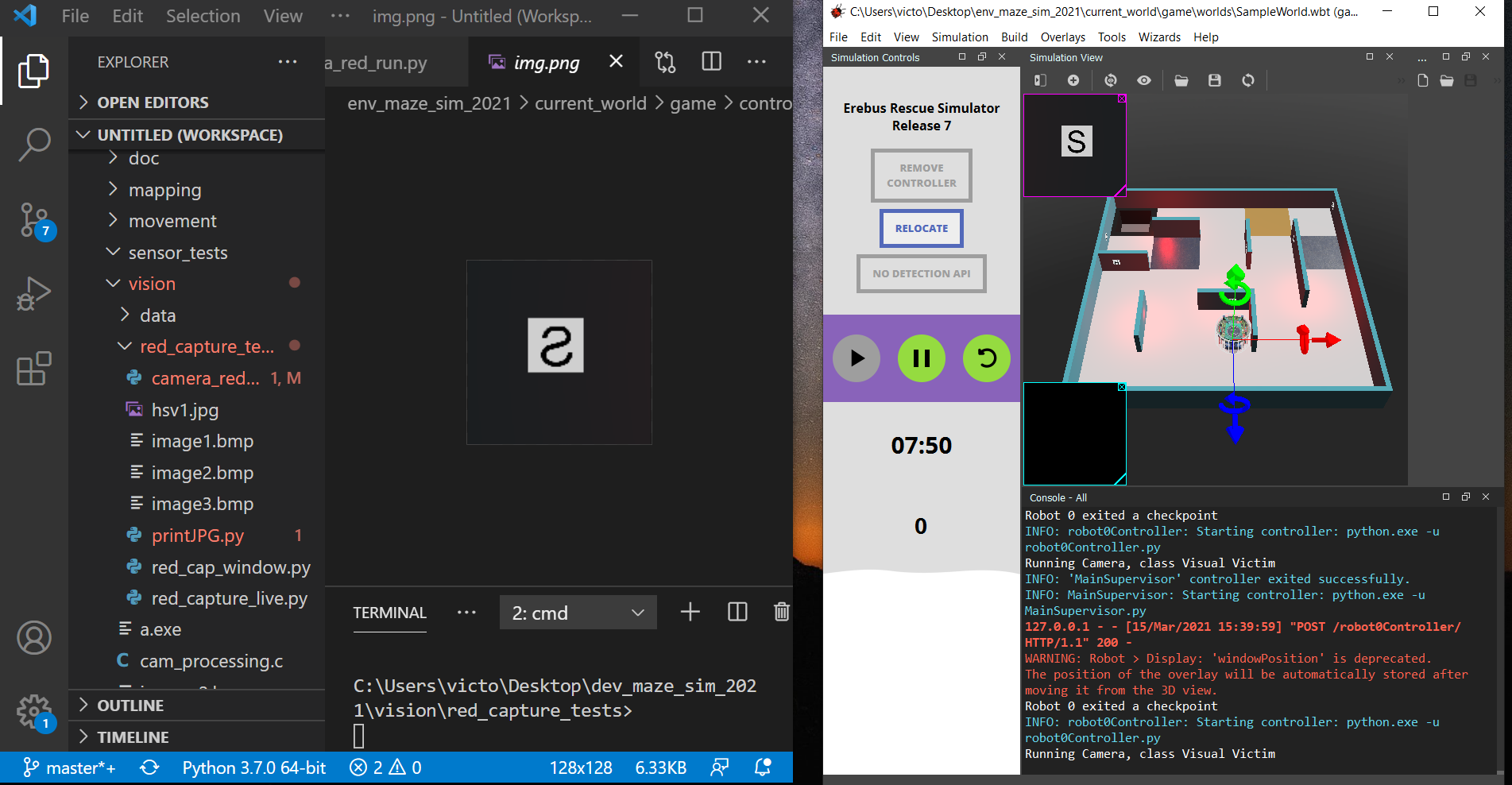

As seen in the above photo, the team proceeded to display the image via the display function. The team did this by reading the file that was previously written by the Pillow Library.

Following this, the team began creating and testing a mask. By writing a mask algorithm that used upper and lower thresholds for red, the team was able to partially detect the red in the image.
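A threshold-based red mask along these lines might look like the sketch below. The threshold values (`r_min`, `g_max`, `b_max`) and the function name `red_mask` are placeholders chosen for illustration, not the values the team settled on; in practice they would need tuning against real camera images.

```python
def red_mask(rows, r_min=150, g_max=80, b_max=80):
    """Build a binary mask from rows of (R, G, B) tuples.

    A pixel counts as red when its red channel is at least r_min and its
    green and blue channels are at most g_max and b_max. Matching pixels
    become 255 in the mask and all others become 0.
    """
    mask = []
    for row in rows:
        mask_row = []
        for r, g, b in row:
            is_red = r >= r_min and g <= g_max and b <= b_max
            mask_row.append(255 if is_red else 0)
        mask.append(mask_row)
    return mask


# A 2x2 test image: bright red, dark red, white, and green pixels.
image = [
    [(220, 30, 40), (100, 10, 10)],
    [(255, 255, 255), (20, 200, 30)],
]
mask = red_mask(image)
red_pixel_count = sum(value == 255 for row in mask for value in row)
```

Note that white also has a high red channel, which is why the green and blue upper bounds matter: without them, bright walls or floors would be marked as red.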

From there, the team played around with both the output and the algorithm to see which methods would find the red the best.

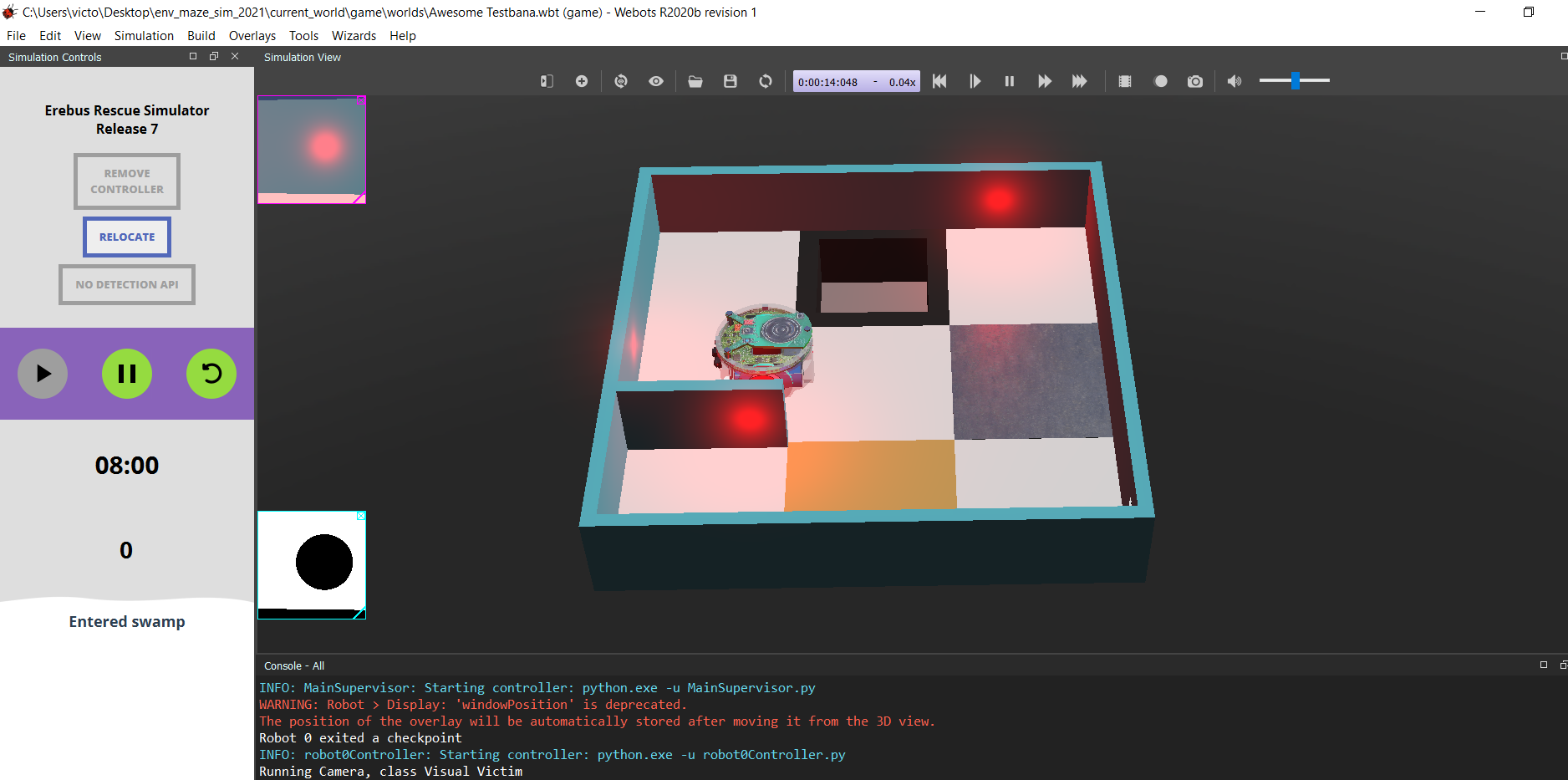

The end result was an algorithm that found the red pixels and displayed them onto a mask. This proved that it was possible to detect colours in the RCJ Rescue Simulation Demonstration Platform.

UPDATE: As of March 28, 2021, there seem to be no heated victims in future competitions. However, this algorithm may be used to detect the new hazard signs. The new rules can be found here.

Here is a close up of the red detection and the resulting mask.